On Jan. 7, Meta, the company that owns Facebook, Instagram and Threads, announced it is ending its fact-checking program and making multiple changes to its hateful conduct policy. Critics claim these changes will increase the spread of misinformation and bigotry on Meta’s platforms.

The policy changes include removing third-party fact-checking and reducing hateful speech restrictions surrounding major political and social issues, including immigration, sexual orientation and gender expression.

In a Facebook post announcing these changes, Mark Zuckerberg, CEO of Meta, said, “It’s time to get back to our roots around free expression on Facebook and Instagram.”

Meta introduced its fact-checking program in 2016 after critics questioned the company’s role in spreading misinformation during the 2016 presidential election. Now, Zuckerberg says that the program is “too biased.”

“After Trump first got elected in 2016, the legacy media wrote nonstop about how misinformation was a threat to democracy,” Zuckerberg said. “We tried in good faith to address those concerns without becoming the arbiters of truth, but fact-checkers have just been too politically biased.”

Though studies have shown that fact-checkers flag conservative users more often, research also shows this is likely because people view news shared by conservatives as untrustworthy. One researcher said conservatism is one of the best predictors of misinformation consumption.

Regardless, fact-checking will be replaced by Community Notes, a system modeled on the one used by X, formerly known as Twitter.

“We’ve seen this approach work on X – where they empower their community to decide when posts are potentially misleading and need more context, and people across a diverse range of perspectives decide what sort of context is helpful for other users to see,” wrote Joel Kaplan, chief global affairs officer at Meta.

This system allows approved contributors, i.e., users with an active phone number who have no violations and have been registered for six months, to add context under a post through notes.

After a contributor suggests a note, other users can vote on whether X should add it. If the note passes, an algorithm checks whether the pool of voters is politically diverse. If it is, the note is published. If the algorithm finds the voters are not ideologically diverse enough, however, the note will never be published, regardless of its accuracy.

Dr. Zachary Beare, an associate professor of English at NC State, said Community Notes isn’t an alternative to fact-checking. “Community Notes are dependent on consensus. And so if there isn’t consensus — you have two people that are just fundamentally opposed on a particular issue — consensus will not be made,” he explained.

The Center for Countering Digital Hate found that the system of Community Notes failed to accurately correct US election misinformation 74% of the time.

Meta also announced changes to its hateful conduct policy.

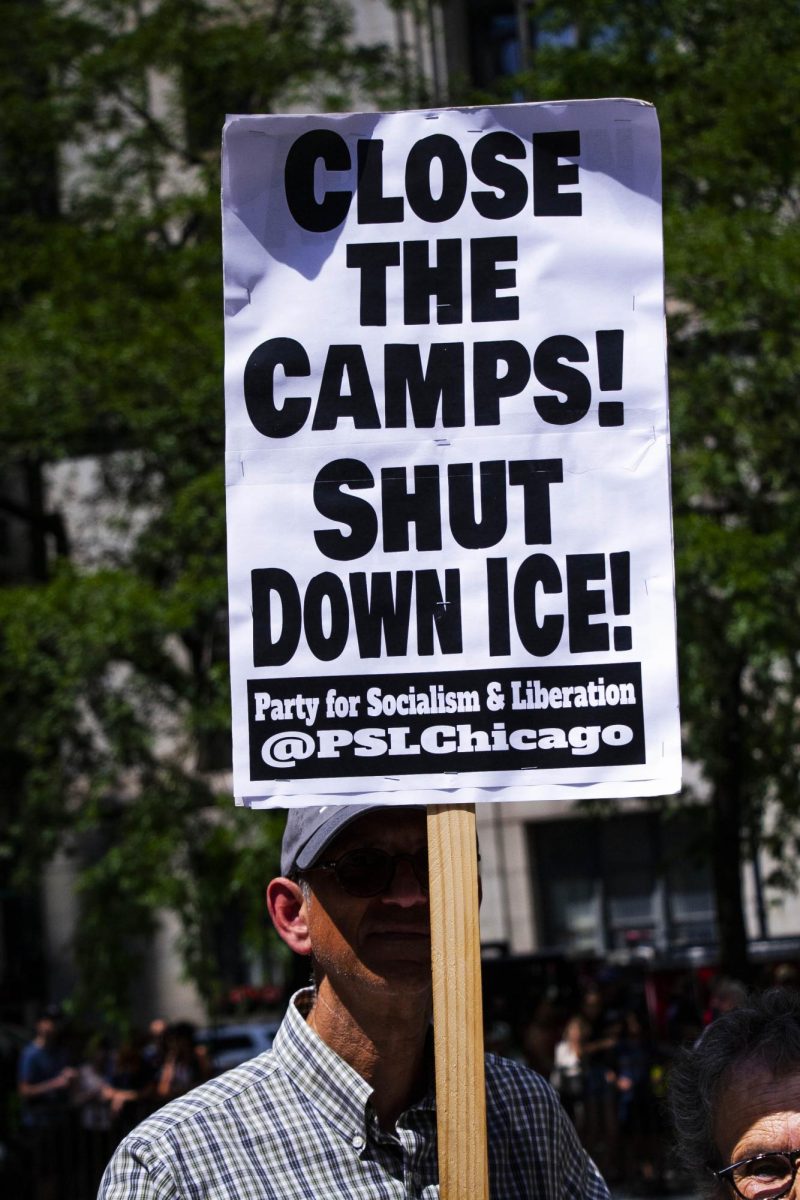

Regarding immigration, Meta’s policy says, “We also protect refugees, migrants, immigrants, and asylum seekers from the most severe attacks (Tier 1 below), though we do allow commentary on and criticism of immigration policies.”

The policy also updates Meta’s stance on sexuality and gender identity, saying, “We do allow allegations of mental illness or abnormality when based on gender or sexual orientation, given political and religious discourse.”

This is despite the fact that neither homosexuality nor being transgender is considered a mental illness in the American Psychiatric Association’s Diagnostic and Statistical Manual of Mental Disorders. The rules regarding mental illness allegations only apply to these issues; any other allegations of mental illness remain prohibited.

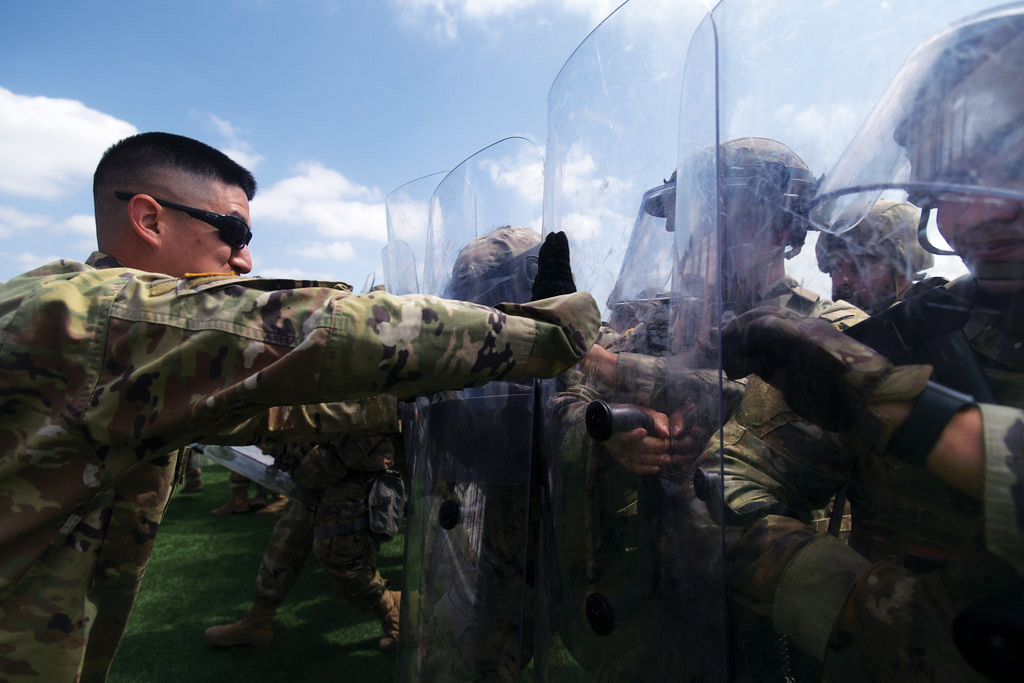

The hateful conduct policy also changes Meta’s rules on gender-based discrimination: “We do allow content arguing for gender-based limitations of military, law enforcement, and teaching jobs.”

Meta also said that insulting LGBTQ+ users and immigrants and calling for their exclusion, as well as insulting a gender because of a breakup, are allowed. “Our policies are designed to allow room for these types of speech,” the policy states.

Despite these changes, Meta says it still protects people with protected characteristics, which Meta defines as “race, ethnicity, national origin, disability, religious affiliation, caste, sexual orientation, sex, gender identity, and serious disease,” from direct attacks.

These changes have come under criticism from several organizations. The Human Rights Campaign said that the hateful conduct policy changes “legitimize–and arguably even incentivize–discrimination.”

GLAAD also criticized the changes in a press release on Jan. 10, 2025. “With these changes, Meta and Mark Zuckerberg are now not only permitting and encouraging, but engaging in anti-LGBTQ hate speech,” GLAAD said. GLAAD also said the policy’s use of terms co-opted by right-wingers to delegitimize trans people encourages anti-LGBTQ violence.

Dr. Beare said the hateful conduct changes will encourage users to spread the now-permitted content. “I think that it enables them to see platforms like Meta-owned companies like Facebook and Instagram as a sort of welcoming space to that sort of content in a lot of ways,” he said.

“There’s an abundance of research already that shows that social media, because of its asynchronous nature, because of the level of mediation and distancing that is provided by screens allows people to be more intense in their rhetorical actions, to be more hateful, more aggressive. And I think that this change in policy for Meta is only going to sort of further enable that,” Dr. Beare said.

Meta isn’t the only platform to change its hateful conduct policy in recent years. In 2023, X removed rules prohibiting the targeted misgendering and deadnaming of transgender people.

Dr. Beare said Meta may be the first of many to adopt these policies. “I think that it is likely that a number of platforms might decide to sort of follow suit and adopt similar sort of resistances to fact-checking and reliance on sort of Community Notes approaches,” he said.

These changes may also lead to users leaving Meta services. “And so I think that’s the big one: that other sort of platforms might follow similar approaches. I think that it also changes people’s understandings of like what these platforms are for and who they are for. And so I think that there will be some interesting movement between different platforms,” Dr. Beare said.

Along with the policy changes, Meta has also ended its diversity, equity and inclusion programs, including ones that connected the company with students from historically Black colleges and universities, Black-owned businesses and diverse suppliers.

In recent weeks, Zuckerberg, who said recent elections helped prompt these changes, donated $1 million to Donald Trump’s inauguration fund and co-hosted a reception for the inauguration with Republican billionaires.